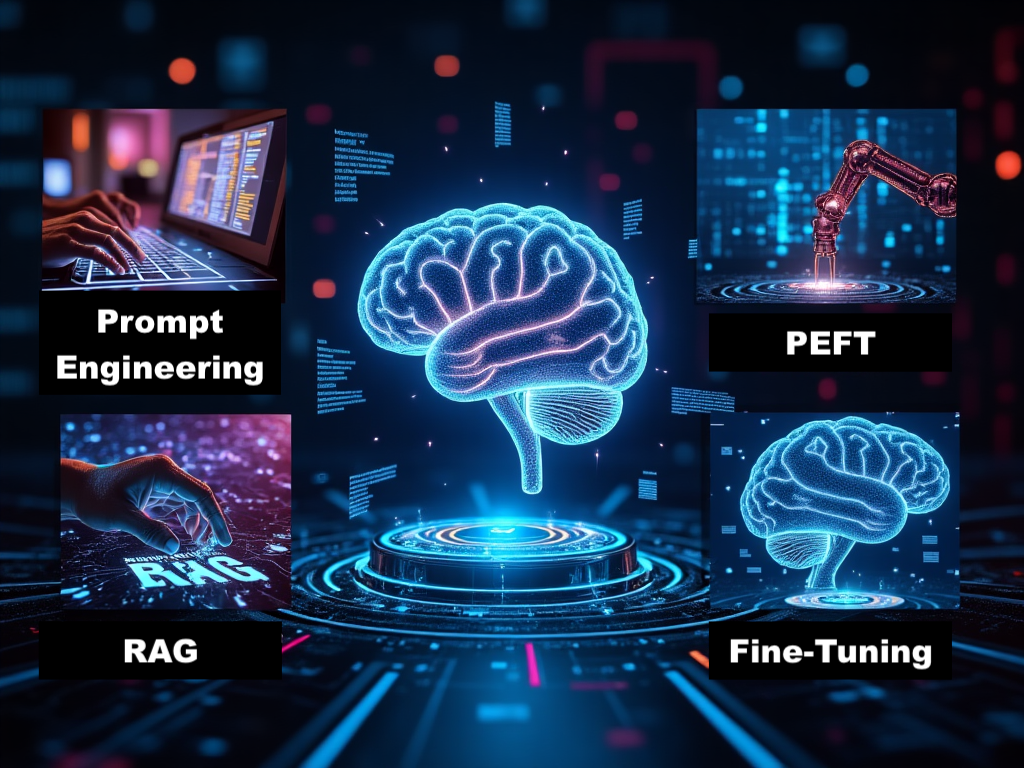

Full fine-tuning is the traditional method for adapting large language models (LLMs): it updates all of the model’s parameters. Because gradients and optimizer state must be kept for every weight, it is more resource-intensive than parameter-efficient fine-tuning (PEFT) and similar methods, which train only a small subset of parameters. In exchange, it allows deeper and more comprehensive customization, especially when adapting a model to tasks or domains that differ significantly from what it was originally trained on.
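To make the resource gap concrete, here is a rough count of trainable weights under each approach. It is a sketch under stated assumptions: the model is a hypothetical stack of 128 square 4096×4096 weight matrices (biases, embeddings, and norms are ignored), and the PEFT side uses LoRA-style rank-8 adapters, one common PEFT technique chosen here for illustration.

```python
def full_ft_trainable(shapes):
    """Full fine-tuning: every entry of every weight matrix is trainable."""
    return sum(d_out * d_in for d_out, d_in in shapes)


def lora_trainable(shapes, rank):
    """LoRA-style PEFT (illustrative assumption): each d_out x d_in matrix is
    frozen, and a low-rank update B @ A is trained instead, where
    B is d_out x rank and A is rank x d_in."""
    return sum(rank * (d_out + d_in) for d_out, d_in in shapes)


# Hypothetical model: 32 layers x 4 square 4096 x 4096 projection matrices.
shapes = [(4096, 4096)] * (4 * 32)

full = full_ft_trainable(shapes)
lora = lora_trainable(shapes, rank=8)

print(f"full fine-tuning: {full:,} trainable weights")
print(f"rank-8 adapters:  {lora:,} trainable weights "
      f"({100 * lora / full:.2f}% of full)")
```

Under these assumptions, full fine-tuning trains about 2.1 billion weights versus roughly 8.4 million for the adapters. An optimizer such as Adam also keeps two moment estimates per trainable weight, so the memory gap widens further during training.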
